As I’ve discussed in the intro, research in pediatric occupational therapy (especially sensory processing) is complicated. The population we work with is highly individualized, and every case is different. So many factors contribute to a child’s sensory processing and motor functioning that creating homogeneous groups is difficult, which makes it challenging to design and implement high-quality research investigations. Alas, we must embrace that providing evidence for what we have seen work in our sessions is a unique and challenging task.

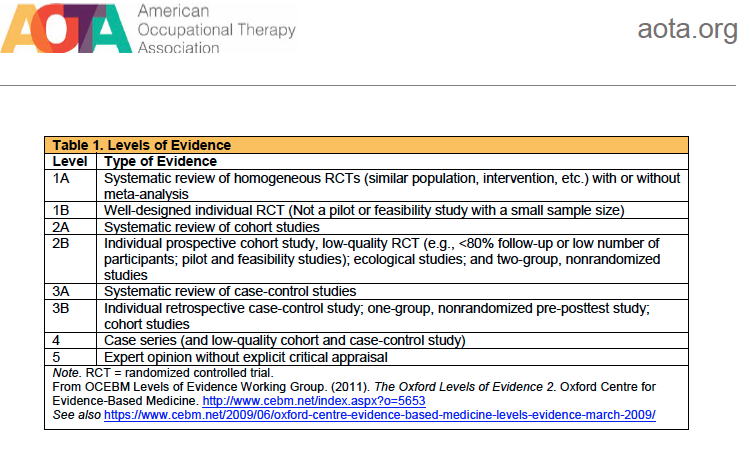

I think many of us are tempted to rely heavily on personal experience of something working for a client. I don’t think we should dismiss that information, but I do think we need to be mindful of the quality of this evidence. At best it is Level 5 evidence, which AOTA’s Levels of Evidence describe as expert opinion that has yet to be critically assessed. The chart below outlines these levels, which authors use when submitting systematic reviews to AOTA (American Occupational Therapy Association, 2020).

Levels of Evidence

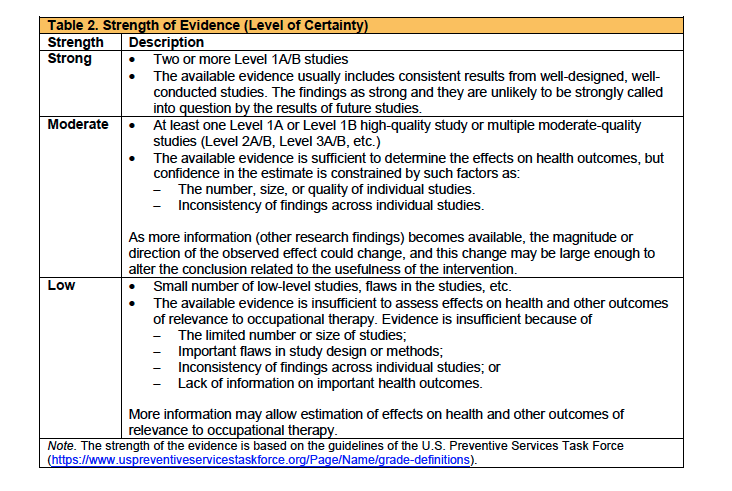

Also relevant when reading and interpreting research is how confident we can be in its impact and applicability. For these purposes, I’ll highlight the limitations noted in each article and offer my interpretation of how they may affect your use of the information in practice.

Journal Credibility

Regarding the credibility of journals, opinions vary widely on how reliable journal-ranking metrics are. Some metrics rely on the number of times a journal is cited within a specified period, such as 2-3 years. However, biases within research communities and in how these metrics are calculated lead some to believe the information remains relative rather than a measurable reflection of quality.

Source Normalized Impact Per Paper (SNIP) Score

For the purposes of this blog, I’ll be using the Source Normalized Impact Per Paper (SNIP) rating. SNIP allows journal impact to be compared across disciplines because it accounts for each field’s publication and citation practices (Elsevier, n.d.). For example, if one field has many more journals and published articles than another field with fewer publications, SNIP accounts for that difference in practice.

SNIP is calculated with the following formula: SNIP = RIP (raw impact per paper) / DCP (database citation potential)

Where RIP is “the average number of times a journal’s publications from the 3 previous years were cited in the year of analysis” and DCP is “the average number of active references in the publications belonging to a journal’s subject field” (Waltman et al., 2012).

For you fellow visual learners…

SNIP = (average number of times a journal’s publications from the previous three years were cited in the year of analysis) / (average number of active references in the publications belonging to a journal’s subject field)
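If it helps to see the arithmetic, here is a minimal sketch of the ratio described above. The function name and the two journals are hypothetical, and the numbers are invented purely for illustration; real SNIP values come from Scopus, not from a calculation like this.

```python
def snip(rip: float, dcp: float) -> float:
    """Simplified SNIP as described in this post: RIP divided by DCP.

    rip: raw impact per paper (average citations per paper)
    dcp: database citation potential of the journal's subject field
    """
    if dcp <= 0:
        raise ValueError("dcp must be positive")
    return rip / dcp


# Two hypothetical journals with made-up numbers (not real Scopus data).
# Journal A sits in a field where papers cite relatively little.
journal_a = snip(rip=1.5, dcp=3.0)
# Journal B sits in a field where papers cite much more heavily.
journal_b = snip(rip=4.0, dcp=8.0)

print(journal_a, journal_b)  # 0.5 0.5
```

Journal B has the higher raw citation rate, but once each field's citation habits are taken into account, the two journals come out even. That is the kind of cross-discipline comparison SNIP is meant to allow.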

SNIP values are available free to the public at https://www.scopus.com/sources.uri.

In sum…

Phew. That felt intense. The point is to give you some groundwork understanding of the measure being used to assess the impact of the journals and articles mentioned. Once we get rolling, I hope the information will be more straightforward, but as any OT knows, you have to have a strong foundation to have quality outcomes. (Proximal stability leads to distal control, anyone?)

References

American Occupational Therapy Association (AOTA). (2020, March). Guidelines for Systematic Reviews. Retrieved March 31, 2020, from http://ajot.submit2aota.org/journals/ajot/forms/systematic_reviews.pdf

Elsevier. (n.d.). Measuring a journal’s impact. Retrieved April 1, 2020, from https://www.elsevier.com/authors/journal-authors/measuring-a-journals-impact

University of Maryland. (n.d.). Research Guides: Bibliometrics and Altmetrics: Measuring the Impact of Knowledge: SNIP (Source Normalized Impact per Paper). Retrieved April 1, 2020, from https://lib.guides.umd.edu/bibliometrics/SNIP

Waltman, L., et al. (2012). Some modifications to the SNIP journal impact indicator. Published in arXiv.org